Artificial intelligence is likely to transform the public sector by automating many government tasks, including combat decisions. But according to experts at a recent symposium held at Harvard University, this “over-the-horizon” technology can only guide and inform government leaders. There will always be a need for human decision-making, and for clear ethical standards to guard against harmful uses.

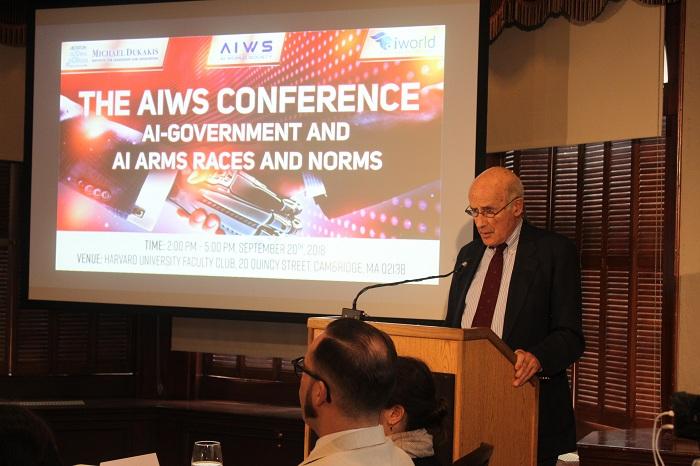

At the September 20 conference, “AI-Government and AI Arms Races and Norms,” organized by the Michael Dukakis Institute (MDI), Professor Marc Rotenberg underscored the growing gap between informed government decision-making and the reality of our technology-driven world. “Governments may ultimately lose control of these systems if they don’t take action,” he told some 60 attendees.

Rotenberg, who teaches at Georgetown University Law School, is President of the Electronic Privacy Information Center (EPIC) and a member of the AI World Society Standards and Practice Committee.

Prof. Matthias Scheutz, Director of the Human-Robot Interaction Laboratory at Tufts University, said the greatest risk posed by AI and robotics technologies arises when unconstrained machine learning gets out of control. This can happen when AI systems acquire knowledge and start to pursue goals their human designers never intended, he said. For example, “If an AI program operating the power grid decides to cut off energy in certain areas for better power utilization overall, it will leave millions of people without electricity, which consequently turns out to be an AI accidental failure.”

Scheutz also said that common preventive measures, whether inside or outside the system, are largely insufficient to safeguard AI and robotics technologies. Even with “emergency buttons” in place, the system might eventually adopt a goal of its own: preventing the very shutdown that humans had set up.

In his view, the best way to safeguard AI systems is to build ethical provisions directly into the learning, reasoning, recognition and other algorithms. In his presentation, he demonstrated “ethical testing” designed to catch and handle ethical violations.

A video of Scheutz’s talk is available at https://youtu.be/66EeYzkTxwA

Prof. Joseph Nye, professor emeritus at Harvard University, who created the concept of “Soft Power” diplomacy, focused on the expansion of Chinese firms in the US market and their ambition to surpass the US in AI. Nye said the notion of an AI arms race and geopolitical competition in AI can have profound effects on our society. However, he added, predictions that China will overtake the US in AI by 2030 are “uncertain” and “indeterminate,” because China’s only advantages are its larger pool of data and its limited concern for privacy.

Nye also pointed out that as AI is unleashed in warfare and autonomous offensives, treaties should be in place to control the technology, perhaps managed by international institutions that would monitor AI programs in various countries.

During the symposium, Tuan Nguyen and Michael Dukakis, cofounders of the Michael Dukakis Institute (MDI), announced MDI’s cooperation with AI World, the industry’s largest conference and expo covering the business and technology of enterprise AI, which will be held in Boston on December 3-5, 2018.

Nguyen said, “Our cooperation marks the determination between two organizations toward achieving the goal of developing, measuring, and tracking the progress of ethical AI policy-making and solution adoption by governments and corporations.” Nguyen also introduced Eliot Weinman, Chairman of the AI World Conference and Expo, as a new member of the AIWS Standards and Practice Committee.

Conference details are published in the current issue of AIWS Weekly.

–Dick Pirozzolo

New Cambridge Observer is a publication of the Harris Communications Group, a PR, content and digital marketing firm based in Cambridge, MA.

Dick Pirozzolo is a member of the Group; the Michael Dukakis Institute, formed by Boston Global Forum, is his client.